Effective haptic feedback in virtual reality (VR) is an essential element for creating convincing immersive experiences. Tools for designing vibrotactile feedback keep evolving but often require expert knowledge and rarely support direct manipulation to design feedback-action mappings within the VR environment.

Weirding Haptics is a novel concept that supports fast prototyping by letting users design such feedback with their voice while manipulating virtual objects in situ. To study our concept, we built a VR design tool that enables users to design tactile experiences by vocalizing while they manipulate objects, provides a set of modifiers for fine-tuning the resulting experiences in VR, and allows users to rapidly compare different experiences by feeling them. This tool is available on GitHub.

Results from a validation study with 8 novice hapticians show that they could vocalize experiences and refine their designs with the fine-tuning modifiers to match their intentions. This work uncovered design implications for fast prototyping of vibrotactile feedback in VR.

2021

Haptics, Design Tool, Virtual Reality


Channelling the Inner Voice

Weirding Haptics takes inspiration from the Weirding Module, a sonic weapon from David Lynch's 1984 film Dune. Soldiers channel the power of their voice to control the weapon and produce different outcomes depending on their performance.

With Weirding Haptics, our main goal was to give novice hapticians, such as video game programmers, students, or UX designers, the means to design convincing haptic experiences for specific contexts. Since anybody can use their voice to produce a wide variety of sounds and imitate specific sensations, we proposed to leverage voice as an input modality for designing inside the virtual environment.

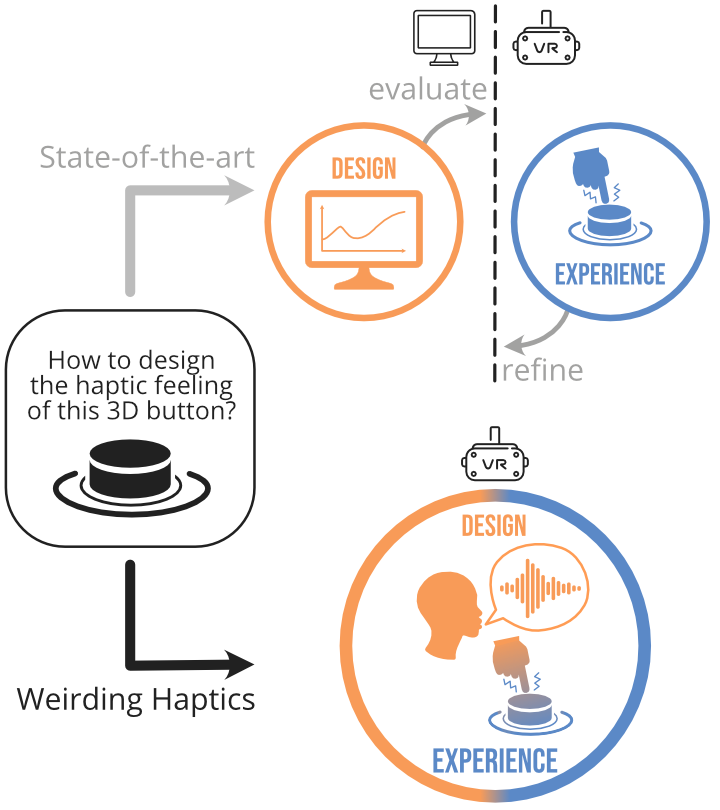

This approach contrasts with state-of-the-art design tools such as Haptic Composer or Lofelt Studio: designers neither need to control abstract parameters to produce effective experiences nor need prior knowledge of haptic design. Furthermore, designers can stay immersed in the virtual environment while designing instead of switching back and forth between a workstation and the environment (see picture on the right).

Making a Lightsaber Tangible: an Example

Can you hear the sound of a lightsaber humming? Could you reproduce this sound with your voice? Then you can make a virtual lightsaber tangible with Weirding Haptics!

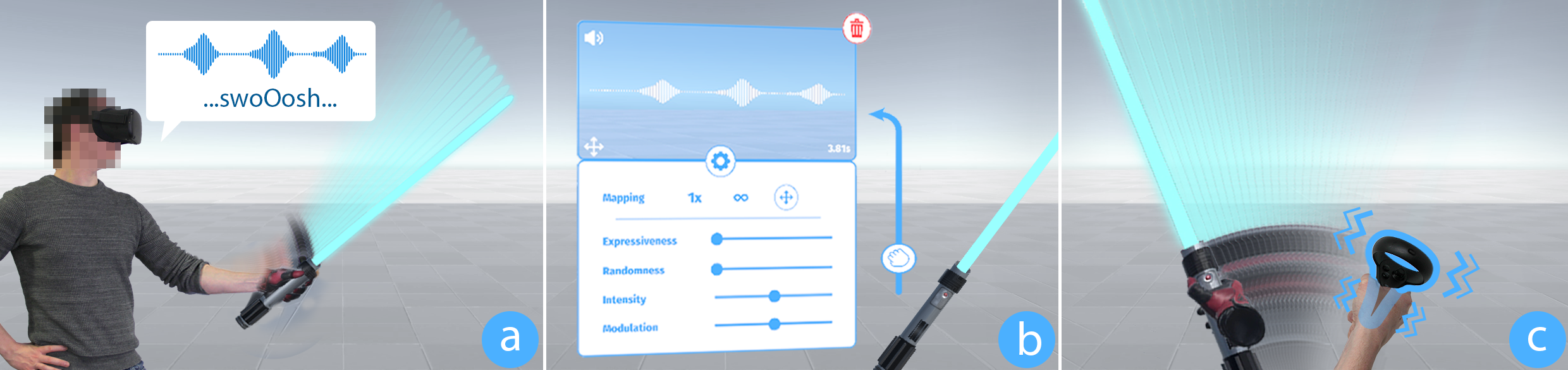

Check out the example depicted in the pictures above. In picture (a), the designer manipulates the lightsaber and vocalizes the vibrations it should produce at specific moments of the movement. In picture (b), the vocalization layer displays information about the designer's vocalizations, namely the waveform of each vocalization and the type of mapping inferred between the vocalization and the movement performed. In picture (c), the designer feels the vibrotactile feedback just created to check whether it matches the original intention. That's all it takes to produce vibrotactile feedback mapped to a user's actions!

Adapting, Fine-Tuning, Correcting with the Vocalization Layer

One cannot expect novice hapticians to have trained voices that produce precise vocalizations, especially when designing vibrotactile feedback. Playing raw vocalizations through vibrotactile actuators is therefore not an option. Instead, we extract amplitudes and frequencies from the vocalizations at regular intervals and play them back as feedback according to the identified spatio-temporal mappings.
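The extraction step can be sketched as follows. This is a minimal illustration under stated assumptions, not the tool's actual implementation: it assumes a mono recording given as float samples in [-1, 1], a hypothetical fixed frame length (`frame_ms`), RMS as the amplitude measure, and the zero-crossing rate as a cheap frequency estimate. The function name `extract_frames` is hypothetical, and the real tool may use a different pitch or amplitude estimator.

```python
import math
from typing import List, Tuple


def extract_frames(samples: List[float], sample_rate: int,
                   frame_ms: float = 10.0) -> List[Tuple[float, float]]:
    """Hypothetical helper: one (amplitude, frequency_hz) pair per frame.

    Amplitude is the frame's RMS value. Frequency is estimated from the
    zero-crossing rate, an approximation that only suits simple voiced sounds.
    Trailing samples that do not fill a whole frame are ignored.
    """
    frame_len = max(1, int(sample_rate * frame_ms / 1000))
    frames = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        # Root-mean-square amplitude of the frame.
        rms = math.sqrt(sum(s * s for s in frame) / frame_len)
        # Count sign changes between consecutive samples.
        crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
        # A periodic signal crosses zero twice per cycle.
        duration = frame_len / sample_rate
        frames.append((rms, crossings / (2 * duration)))
    return frames
```

For example, a 100 Hz tone sampled at 8 kHz and cut into 100 ms frames yields frames whose amplitude is close to 0.707 (the RMS of a unit sine) and whose frequency is close to 100 Hz. Reducing the voice to such a coarse envelope is what makes imprecise vocalizations usable: small pitch wobbles average out within each frame.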

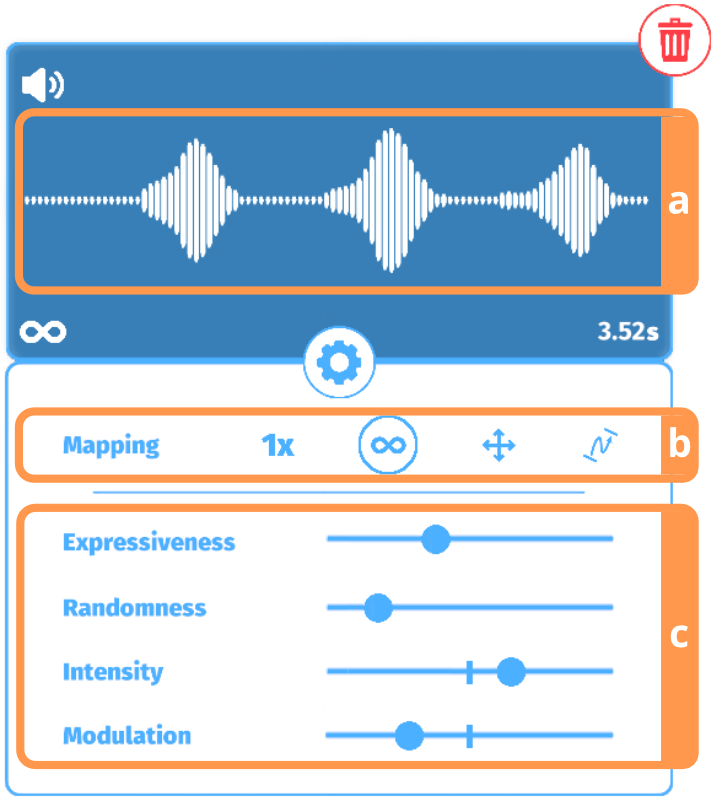

As shown in picture (b) on the right, the Weirding Haptics design tool offers four spatio-temporal mappings: instantaneous, continuous, motion, and positional. An instantaneous mapping plays the vibrations only once, while a continuous one plays them indefinitely. A motion mapping builds on a continuous one but varies amplitudes and frequencies according to the movement speed. A positional mapping associates vibrations with spatial landmarks. Although a mapping is inferred automatically after a vocalization is recorded, designers can switch between mappings through the vocalization layer.
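The behavior of the four mappings can be illustrated with a small playback function. Everything in this sketch is hypothetical (the `Mapping` enum, `feedback_at`, and all of its parameters): it assumes a vocalization already reduced to (amplitude, frequency) frames spaced `frame_dt` seconds apart, a linear speed-to-intensity scaling for the motion mapping, and, for the positional mapping, one 1-D landmark per frame with an arbitrary trigger `radius`. It is not how the tool itself is implemented.

```python
from enum import Enum
from typing import List, Optional, Tuple

Frame = Tuple[float, float]  # (amplitude, frequency_hz)
SILENT: Frame = (0.0, 0.0)


class Mapping(Enum):
    INSTANTANEOUS = "instantaneous"
    CONTINUOUS = "continuous"
    MOTION = "motion"
    POSITIONAL = "positional"


def feedback_at(frames: List[Frame], mapping: Mapping, t: float, frame_dt: float,
                speed: float = 0.0, ref_speed: float = 1.0,
                position: float = 0.0, landmarks: Optional[List[float]] = None,
                radius: float = 0.05) -> Frame:
    """Hypothetical: the (amplitude, frequency) to drive the actuator with at time t."""
    if not frames:
        return SILENT
    index = int(t / frame_dt)
    if mapping is Mapping.INSTANTANEOUS:
        # Play the recorded frames once, then stay silent.
        return frames[index] if index < len(frames) else SILENT
    if mapping is Mapping.CONTINUOUS:
        # Loop the recorded frames indefinitely.
        return frames[index % len(frames)]
    if mapping is Mapping.MOTION:
        # Loop like CONTINUOUS, scaled by movement speed (assumed linear, capped at 1).
        amp, freq = frames[index % len(frames)]
        gain = min(max(speed, 0.0) / ref_speed, 1.0)
        return (amp * gain, freq * gain)
    # POSITIONAL: play the frame tied to the nearest landmark when close enough to it.
    if landmarks is None or len(landmarks) != len(frames):
        raise ValueError("positional mapping needs one landmark per frame")
    nearest = min(range(len(landmarks)), key=lambda i: abs(landmarks[i] - position))
    if abs(landmarks[nearest] - position) <= radius:
        return frames[nearest]
    return SILENT
```

Keeping the frames independent of the mapping reflects the design described above: the same vocalization can be replayed under any of the four mappings, so switching mappings in the vocalization layer requires no new recording.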

To correct mistakes made while recording a vocalization, or simply to fine-tune it toward a better result without recording a new one, the vocalization layer offers four fine-tuning modifiers (see picture (c) on the right).

Validating the Weirding Haptics Concept

We ran a validation study with 8 novice hapticians to validate our concept. The task was open-ended: we proposed a list of virtual objects to interact with, and participants designed the vibrotactile feedback they wanted for a given object and action. The proposed objects covered various types of interaction, such as stroking, shaking, and sliding. We asked participants to rank each experience they produced according to how well it matched their initial intention.

Overall, participants felt they could quickly produce convincing vibrotactile feedback, and they did so with little training (approximately 15 minutes, even though some participants had no prior experience with VR).

Based on participants' remarks and our observations during the study, we derived design implications for in-situ prototyping of vibrotactile feedback in VR. More details can be found in the paper.

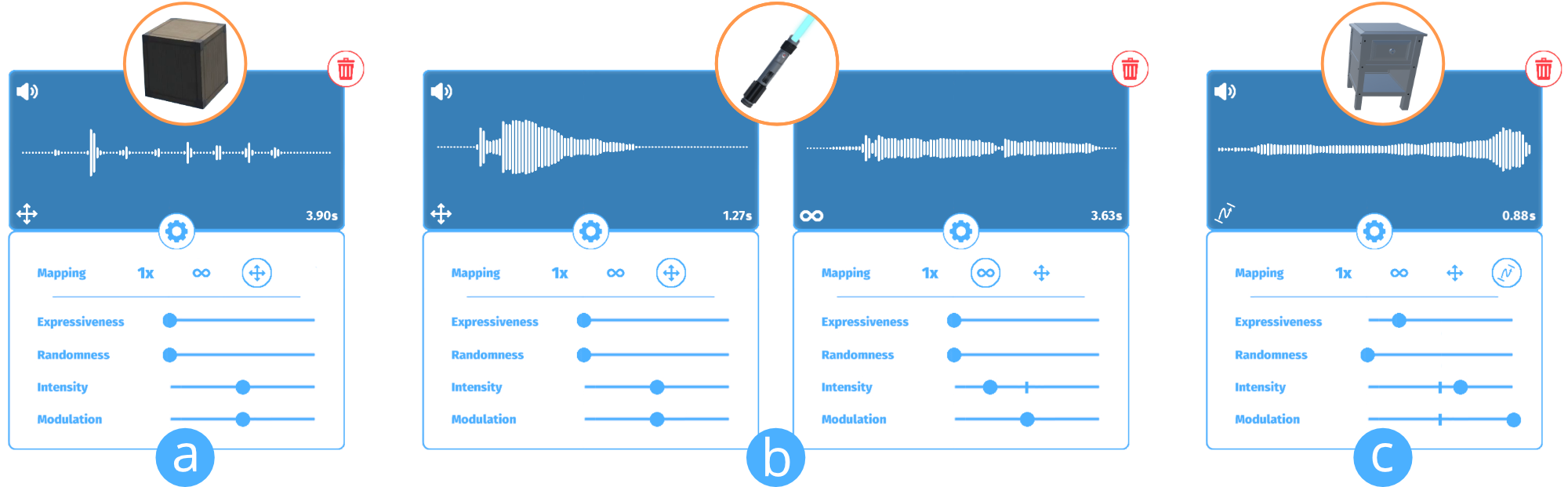

Examples of vibrotactile feedback designed by participants of the study (from left to right): a rock tumbling inside a box, the humming and waving of a lightsaber, a drawer opening.

Bruno Fruchard, Donald Degraen, Frederik Smolders, Emmanouil Potetsianakis, Seref Güngör, Antonio Krüger, and Jürgen Steimle. Weirding Haptics: In-Situ Prototyping of Vibrotactile Feedback in Virtual Reality through Vocalization. In UIST '21: The 34th Annual ACM Symposium on User Interface Software and Technology.